Content Filter

What is a Content Filter?

A content filter, commonly referred to as internet filter software, is a tool used to control and manage access to online content. Individuals and organizations, including governments, use it to limit what can be viewed or accessed on the internet, with the aim of keeping the digital space appropriate and secure.

How Content-Control Software Functions

Content filters work by applying different techniques to monitor and manage the content users can access. They can be set at various levels, from broad nationwide filtering down to local filtering specific to a single organization or institution.

The primary purpose of content filters is to stop or restrict exposure to explicit, harmful, or inappropriate content. This includes, but is not limited to, adult content, violent content, hate speech, and content related to illegal activities.

A content filter uses a mixture of methods to achieve this goal, including keyword filtering, URL filtering, image recognition, and AI algorithms. These techniques sort content according to its relevance or appropriateness: the filter compares the content against predefined rules or databases and then decides whether a given website or piece of content should be blocked or allowed.
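The comparison against predefined rules can be illustrated with a minimal Python sketch that combines URL filtering and keyword filtering. The domain list, the keyword list, and the function names below are hypothetical and chosen only for illustration; a production filter would typically rely on a maintained category database rather than hard-coded sets.

```python
import re
from urllib.parse import urlparse

# Hypothetical rule sets; a real filter would load these from a maintained database.
BLOCKED_DOMAINS = {"blocked.example", "adult.example"}
BLOCKED_KEYWORDS = {"gambling", "violence"}


def domain_is_blocked(url: str) -> bool:
    """URL filtering: match the host, or any parent domain, against the blocklist."""
    host = (urlparse(url).hostname or "").lower()
    parts = host.split(".")
    # Check "a.b.c", then "b.c", then "c", so subdomains of a blocked domain are caught.
    return any(".".join(parts[i:]) in BLOCKED_DOMAINS for i in range(len(parts)))


def text_is_blocked(text: str) -> bool:
    """Keyword filtering: match whole words, case-insensitively."""
    words = set(re.findall(r"[a-z0-9']+", text.lower()))
    return not words.isdisjoint(BLOCKED_KEYWORDS)


def decide(url: str, text: str) -> str:
    """Return "block" if either rule matches, otherwise "allow"."""
    if domain_is_blocked(url) or text_is_blocked(text):
        return "block"
    return "allow"
```

Calling `decide("https://cdn.adult.example/page", "hello")` would return `"block"` because the host is a subdomain of a listed domain. Matching whole words rather than substrings is one possible design choice that reduces false positives, at the cost of missing deliberately misspelled terms.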

Levels of Content Filtering

Content filtering can be implemented nationally, with governments using their authority to control what information their people can access. For example, countries such as China and North Korea are known for content censorship, having placed heavy restrictions on websites, social media platforms, and even search engines.

At the local level, content filters are used in a range of settings: educational institutions, workplaces, libraries, and homes. Schools install content filtering so that students cannot view objectionable material and their internet activity complies with school rules. Similarly, workplaces use content filters to improve productivity, reduce distractions, and prevent employees from visiting unauthorized websites during working hours. Libraries also use content filters to give their patrons a safe browsing environment.

Terminology and Software Classification

Content-filtering mechanisms go by different names depending on the context and the industry. These include web filtering software, internet censorship tools, parental control software, and content-control software. Although the terms differ slightly in meaning, they all refer to software that helps regulate content delivered over the internet.

Methods of Filtering

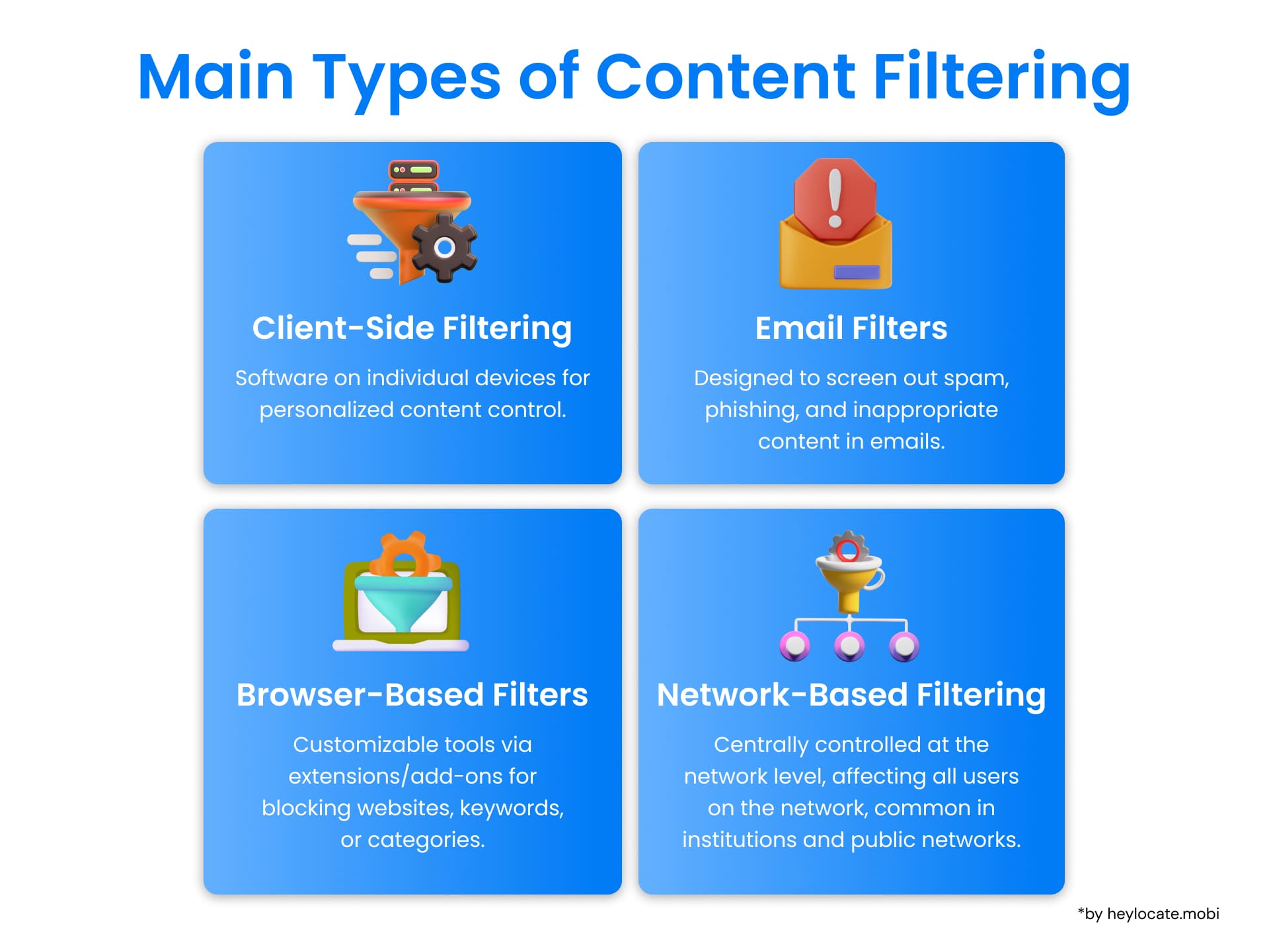

A variety of methods can be employed for filtering, such as browser-based filters, email filters, client-side filtering, and network-based filtering.

- Browser-based filters are typically implemented as browser extensions or add-ons. They let users choose what content to block or allow, and can be set up to block specific websites, keywords, or entire content categories. This puts control over browsing in the hands of the user.

- Email filters are designed to remove unwanted or malicious content from incoming email. They can help detect and block spam, phishing attempts, and emails containing explicit or antisocial content.

- Client-side filtering refers to software installed on individual devices, such as laptops or smart devices. These filters give users a large degree of freedom because they can be configured to match each user's requirements and preferences.

- Network-based filtering operates at the network level instead. It is usually configured by network administrators or internet service providers (ISPs), and is common in educational institutions, companies, and public areas where multiple users share the same network connection. Network-based filters can be more intrusive because they are centrally controlled and apply to every device connected to the network.
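The difference between centrally controlled network-based filtering and per-device client-side filtering can be sketched in Python as two layers of policy. This is a simplified model under stated assumptions: the `Policy` class, the category names, and the rule that a device policy can only add restrictions on top of the network policy are all hypothetical and not taken from any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """A set of content categories that a filter blocks (category names are illustrative)."""
    blocked_categories: set = field(default_factory=set)


def is_blocked(category: str, network: Policy, device: Policy) -> bool:
    """A request is blocked if either the network-wide or the device policy blocks it."""
    return category in network.blocked_categories or category in device.blocked_categories


# Network-based policy: set centrally, applies to every connected device.
school_network = Policy({"adult", "gambling"})

# Client-side policies: set per device, reflecting each user's preferences.
student_laptop = Policy({"social-media"})
staff_laptop = Policy()
```

In this model, `is_blocked("gambling", school_network, staff_laptop)` is true for every device on the network, while `"social-media"` is blocked only on the device whose own policy lists it.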

Industry Terms vs. Critic Terms

The industry and its critics use different terminology for content filtering. The industry uses terms such as “content filtering” and “content-control software” to present the software positively, emphasizing its capacity to protect users from harmful content and to help organizations comply with the law.

Critics, on the other hand, use terms like “censorship” and “controlling software” to warn of the potential drawbacks of content filters, such as limiting freedom of speech or censoring information.

Understanding these terms and what they imply is the basis for a balanced view of the topic. Content filters can be seen as both beneficial and controversial, depending on how they are implemented and how much control is exercised over the filtered content.
